What is FP16 (“half”)?

FP16 (aka “half” floating-point) is the IEEE lower-precision floating-point representation that has recently begun to be supported by GPGPUs for compute (e.g. Intel EV9+ Skylake GPU, nVidia Pascal) while CPU support is still limited to SIMD conversion only (FP16C). It has been added to allow mobile devices (phones, tablets) to provide increased performance (and thus save power for fixed workloads) for a small drop in quality for normal 8-bbc (24-bbp) image and video.

However, normal laptops and tablets with integrated graphics can also benefit from FP16 support in same way due to relatively low graphics compute power and the need to save power due to limited battery in thin and light formats.

In this article we’re investigating the performance differences vs. standard FP32 (aka “single”) and the resulting quality difference (if any) for mobile GPGPUs (Intel’s EV9/9.5 SKL/KBL). See the previous articles for general performance comparison:

Image Processing Performance & Quality

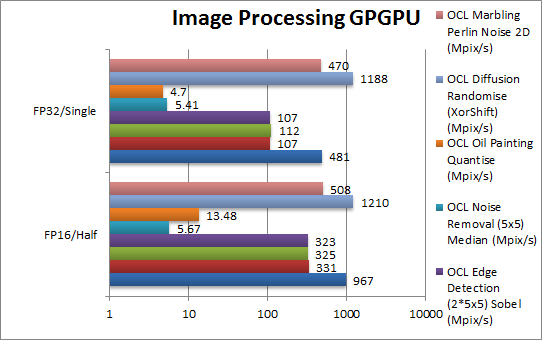

We are testing GPGPU performance of the GPUs in OpenCL, DirectX/OpenGL ComputeShader .

Results Interpretation: Higher values (MPix/s, MB/s, etc.) mean better performance.

Environment: Windows 10 x64, latest Intel drivers (April 2017). Turbo / Dynamic Overclocking was enabled on all configurations.

Other Image Processing relating Algorithms

Final Thoughts / Conclusions

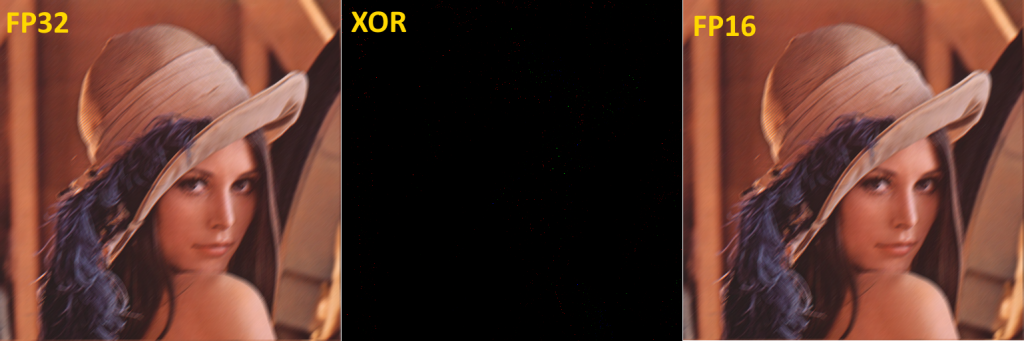

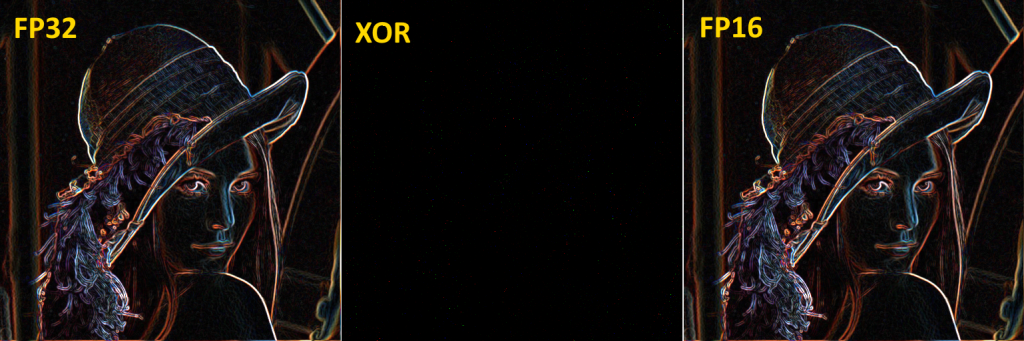

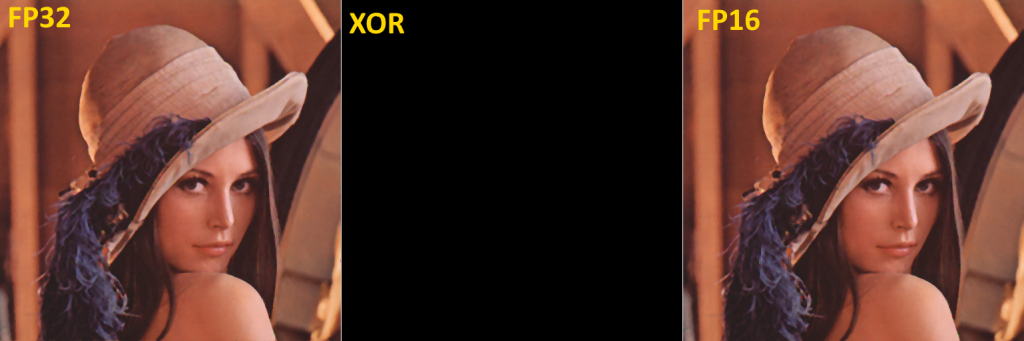

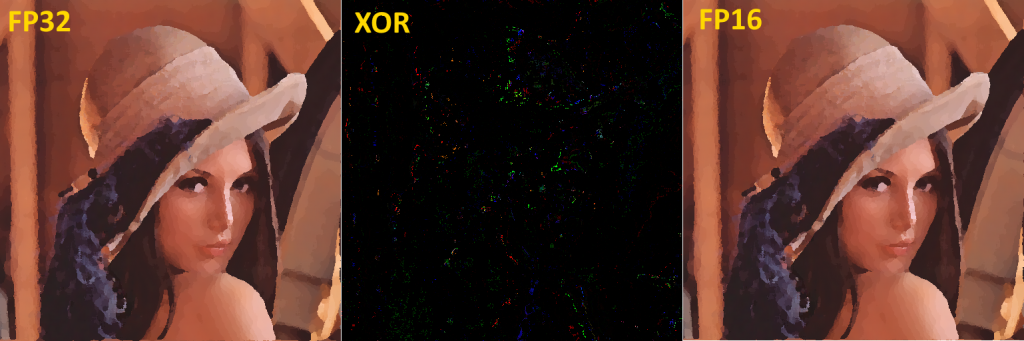

For many image processing filters (Blur, Sharpen, Sobel/Edge-Detection, Median/De-Noise, etc.) we see a huge 2-3x performance increase – more than we’ve hoped for (2x) – with little or no image quality degradation. Thus FP16 support is very much useful and should be used when supported.

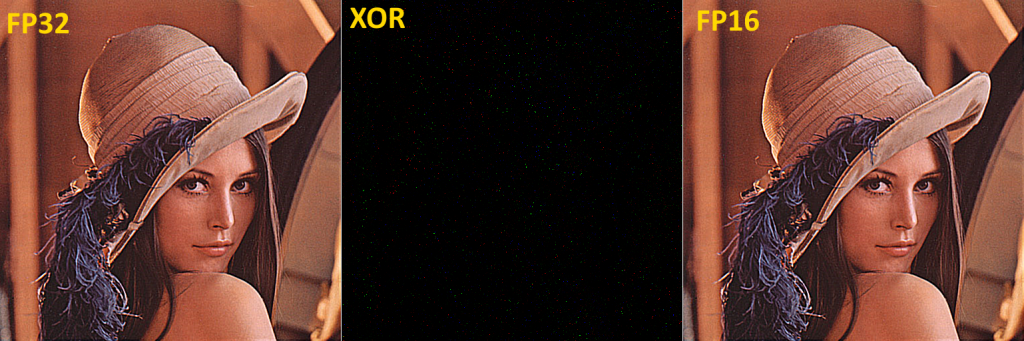

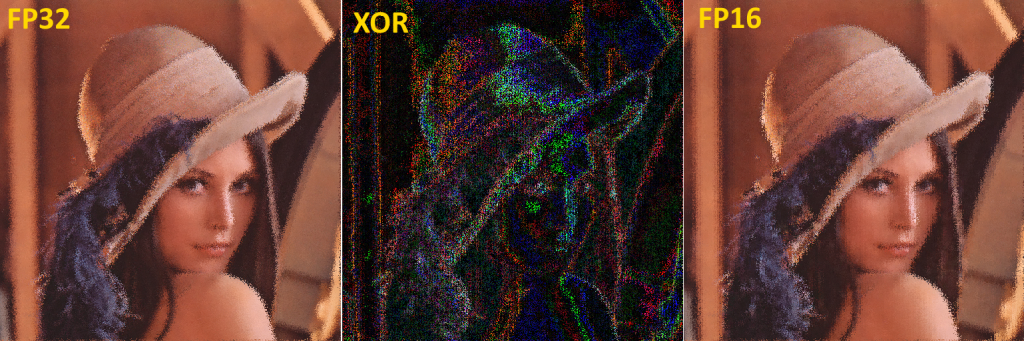

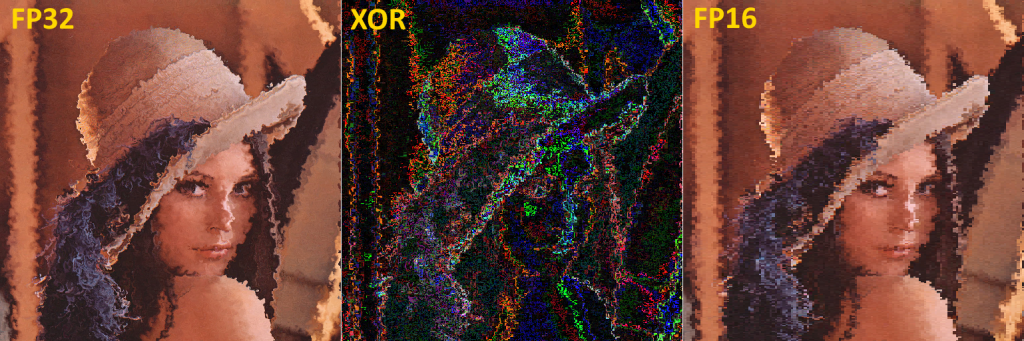

However for complex filters (Diffusion, Marble/Perlin Noise) the drop in quality is not acceptable for minor performance increase (2-8%); increasing the precision of more data items to improve quality (from FP16 to FP32) would further drop performance making the whole endeavour pointless.

For those algorithms that do benefit from FP16 the performance improvement with FP16 is very much worth it – so FP16 support is very useful indeed.

Pingback: SiSoftware Sandra Platinum updated to RTMa – SiSoftware